The New UI Paradigm

The way people interact with software is changing. For decades, users navigated to websites, learned interfaces, and clicked through menus. Now, AI assistants are becoming the primary interface. Users describe what they want, and the AI figures out how to make it happen.

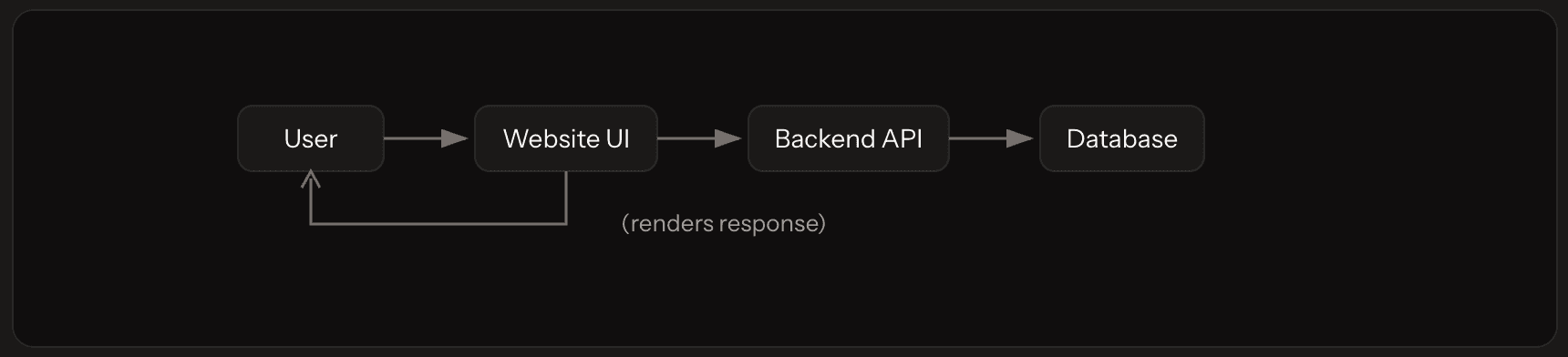

The Traditional Model

In conventional web applications:

Users learn your interface. They click buttons, fill in forms, and navigate menus. The UI is the contract between your service and the user.

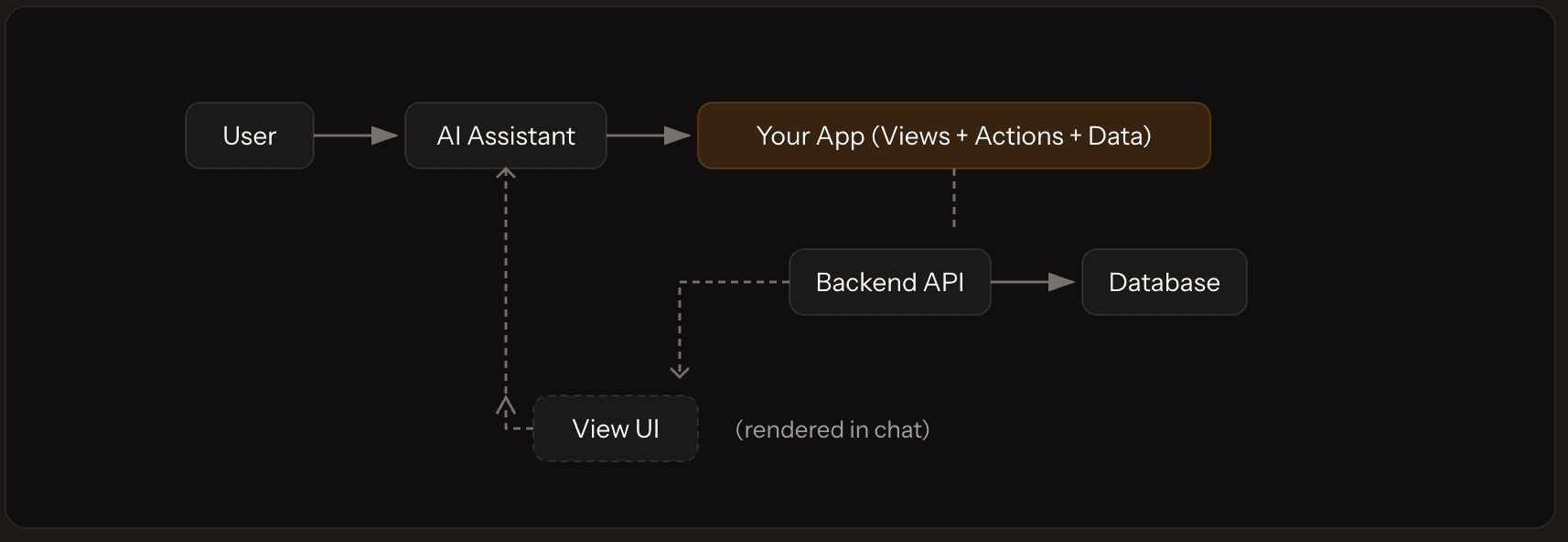

The New Model

With AI agents becoming primary interfaces:

The AI becomes the orchestrator. It interprets user intent, decides which tools to call, and determines when to show a UI. Your interface appears contextually, when needed.

What Changes for Developers

1. Intent Over Navigation

Users don't click through menus. They express intent.

Instead of: Click "Flights" → "Search" → Enter details

Users say: "Find me flights to Tokyo next week"

The AI interprets this and calls your tools with extracted parameters.
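The AI turns a sentence into structured arguments for one of your tools. As a minimal sketch, assume a hypothetical `searchFlights` tool whose inputs are described with JSON Schema (the format MCP uses for a tool's `inputSchema`). The code shows the arguments an assistant might extract from "Find me flights to Tokyo next week" and a handler that checks them before running. The tool, its fields, the sample flights, and the resolved dates are all illustrative assumptions.

```typescript
// Hypothetical flight-search tool: the schema tells the AI which
// parameters to extract from the user's request.
interface FlightSearchArgs {
  destination: string;
  departFrom: string; // ISO date, inclusive
  departTo: string; // ISO date, inclusive
}

const searchFlightsSchema = {
  type: "object",
  properties: {
    destination: { type: "string", description: "Destination city" },
    departFrom: { type: "string", format: "date", description: "Earliest departure date" },
    departTo: { type: "string", format: "date", description: "Latest departure date" },
  },
  required: ["destination", "departFrom", "departTo"],
} as const;

interface Flight {
  id: string;
  destination: string;
  date: string; // ISO date
}

// Sample data standing in for a real flight database.
const FLIGHTS: Flight[] = [
  { id: "NH10", destination: "Tokyo", date: "2025-06-10" },
  { id: "JL5", destination: "Tokyo", date: "2025-06-20" },
  { id: "BA7", destination: "London", date: "2025-06-11" },
];

// Reject AI-extracted arguments that miss a required field.
function parseArgs(raw: Record<string, unknown>): FlightSearchArgs {
  for (const key of searchFlightsSchema.required) {
    if (typeof raw[key] !== "string") {
      throw new Error(`Missing or invalid argument: ${key}`);
    }
  }
  return raw as unknown as FlightSearchArgs;
}

function searchFlights(raw: Record<string, unknown>): Flight[] {
  const args = parseArgs(raw);
  // ISO dates in YYYY-MM-DD form compare correctly as strings.
  return FLIGHTS.filter(
    (f) =>
      f.destination === args.destination &&
      f.date >= args.departFrom &&
      f.date <= args.departTo,
  );
}

// What the AI might send for "Find me flights to Tokyo next week",
// assuming "next week" resolves to 2025-06-09 through 2025-06-15.
const extracted = { destination: "Tokyo", departFrom: "2025-06-09", departTo: "2025-06-15" };
console.log(searchFlights(extracted).map((f) => f.id)); // [ 'NH10' ]
```

Validating inside the handler is worthwhile because the arguments come from a model's interpretation of free text, not from a form with fixed fields.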

2. Contextual UI

Your UI appears only when needed. Instead of showing everything at once, the AI surfaces the right interface at the right moment.

| Traditional | New Paradigm |
|---|---|
| User navigates to dashboard | AI shows data on demand |
| Full-page forms | Focused views with relevant fields |
| Static navigation | Contextual tool invocation |
| User learns your interface | AI learns user intent |
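One way to picture "UI only when needed" is a registry in which some capabilities return plain data and others are backed by a view. The minimal sketch below assumes the host renders a UI only when the AI chooses a view-backed capability. `Capability`, `invoke`, the capability names, and the `ui` path are hypothetical names for illustration, not Pancake's API.

```typescript
// Hypothetical registry: some capabilities return data only, others are
// backed by a view that the host can render.
interface Capability {
  description: string;
  ui?: string; // path to the view's HTML, if the capability has one
  handler: (args: Record<string, string>) => unknown;
}

interface Invocation {
  data: unknown;
  render?: string; // set only when there is a view to show
}

const capabilities: Record<string, Capability> = {
  getBalance: {
    description: "Return the account balance as a number",
    handler: () => 1280,
  },
  showTransactions: {
    description: "Show recent transactions in an interactive table",
    ui: "./src/views/transactions.html",
    handler: () => [{ id: "t1", amount: -42 }],
  },
};

// What the host does with the tool call the AI chose.
function invoke(name: string, args: Record<string, string> = {}): Invocation {
  const cap = capabilities[name];
  if (!cap) throw new Error(`Unknown capability: ${name}`);
  const data = cap.handler(args);
  // Only view-backed capabilities produce something to render.
  return cap.ui ? { data, render: cap.ui } : { data };
}

console.log(invoke("getBalance")); // { data: 1280 }
console.log(invoke("showTransactions").render); // ./src/views/transactions.html
```

A quick balance check stays a text answer, while a request to browse transactions surfaces the table view.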

3. Bidirectional Communication

Views can communicate back to the AI:

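```jsx
function FlightView() {
  // Data the AI passed to this view (the tool result)
  const { data } = useViewParams();
  // Sends a message back into the conversation
  const { say } = useNavigation();
  // Triggers a named action, here 'bookFlight'
  const { dispatch } = useAction();

  const handleBook = async (flight) => {
    await dispatch('bookFlight', { flightId: flight.id });
    // Hand control back to the AI with a follow-up request
    say('Flight booked! Now help me find hotels nearby.');
  };

  return <FlightList flights={data.flights} onBook={handleBook} />;
}
```

4. Multi-Step Workflows Become Natural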

Complex workflows that required careful UX design (wizards, multi-page checkouts) become natural conversations. The AI maintains context while your views handle specific interactions.
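To make "the AI maintains context" concrete, here is a minimal sketch that mirrors the flight-then-hotels example: each step's result is recorded, and the follow-up request resolves "nearby" from the booked flight instead of asking the user again. The `Conversation` class, the step shapes, and the sample hotel names are hypothetical; in a real deployment the AI keeps this context, and the class only models it.

```typescript
// Hypothetical record of tool results within one conversation.
type Step =
  | { tool: "bookFlight"; result: { flightId: string; destination: string } }
  | { tool: "searchHotels"; result: { city: string; hotels: string[] } };

class Conversation {
  private steps: Step[] = [];

  record(step: Step): void {
    this.steps.push(step);
  }

  // Most recent booked flight, searching from the latest step backwards.
  lastBooking(): { flightId: string; destination: string } | undefined {
    for (const s of this.steps.slice().reverse()) {
      if (s.tool === "bookFlight") return s.result;
    }
    return undefined;
  }
}

// Stand-in for your hotel search tool.
function searchHotels(city: string): string[] {
  return city === "Tokyo" ? ["Shinjuku Inn", "Ginza Suites"] : [];
}

const convo = new Conversation();
// Step 1: the flight view dispatches a booking.
convo.record({ tool: "bookFlight", result: { flightId: "NH10", destination: "Tokyo" } });
// Step 2: "Now help me find hotels nearby" takes the city from context.
const city = convo.lastBooking()?.destination ?? "";
convo.record({ tool: "searchHotels", result: { city, hotels: searchHotels(city) } });
console.log(city, searchHotels(city)); // Tokyo [ 'Shinjuku Inn', 'Ginza Suites' ]
```

Neither view needs to know about the other; the shared context is what connects the two steps.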

Why This Matters

Massive Distribution

AI assistants have hundreds of millions of users. Build once and reach users on every AI host your app supports.

Lower Friction

No app discovery, no downloads, no learning curve. Users just ask.

Contextual Value

Your UI appears when needed, pre-loaded with relevant data.

Compound Workflows

Users combine multiple apps in a single conversation.

The New Mental Model

Think of your application not as a destination, but as a capability the AI can invoke.

| Traditional Thinking | New Thinking |
|---|---|
| Building complete experiences | Building focused capabilities |
| Designing user flows | Designing for AI understanding |
| Creating navigation | Writing clear descriptions |
| Handling all states | Handling the moment of need |

Where Pancake Fits

This paradigm shift brings a challenge: different AI hosts implement these patterns differently. Claude uses the Model Context Protocol (MCP). ChatGPT uses Actions. Future platforms may introduce protocols of their own.
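To see what "implement these patterns differently" means in practice, the sketch below translates one host-neutral capability definition into two shapes: an MCP tool descriptor (`name`, `description`, `inputSchema`, as listed by an MCP server) and an OpenAPI operation of the kind used to describe a ChatGPT Action. It is an illustration under those assumptions, not Pancake's internal implementation; `CapabilityDef`, `toMcpTool`, and `toOpenApiPath` are hypothetical names.

```typescript
// Hypothetical host-neutral capability definition.
interface CapabilityDef {
  name: string;
  description: string;
  inputSchema: Record<string, unknown>; // JSON Schema for the arguments
}

// MCP describes a tool by its name, description, and JSON Schema input.
function toMcpTool(def: CapabilityDef) {
  return { name: def.name, description: def.description, inputSchema: def.inputSchema };
}

// Actions are described by an OpenAPI document; here each capability
// becomes a POST operation that takes a JSON request body.
function toOpenApiPath(def: CapabilityDef) {
  return {
    [`/${def.name}`]: {
      post: {
        operationId: def.name,
        description: def.description,
        requestBody: {
          required: true,
          content: { "application/json": { schema: def.inputSchema } },
        },
        responses: { "200": { description: "Successful result" } },
      },
    },
  };
}

const search: CapabilityDef = {
  name: "search",
  description: "Search and display results",
  inputSchema: {
    type: "object",
    properties: { query: { type: "string" } },
    required: ["query"],
  },
};

console.log(JSON.stringify(toMcpTool(search)));
console.log(Object.keys(toOpenApiPath(search))); // [ '/search' ]
```

The general idea behind a define-once layer is that the description and input schema live in one place and each host's format is generated from them.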

Pancake abstracts these differences:

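```typescript
import { z } from 'zod';
// createApp and defineView come from Pancake; searchDatabase is your own function.

// Define once
const app = createApp({
  name: 'my-app',
  version: '1.0.0',
  views: {
    search: defineView({
      // Tells the AI what this view is for
      description: 'Search and display results',
      // Arguments the AI must extract from the user's request
      input: z.object({ query: z.string() }),
      // Runs when the AI invokes the view
      handler: async ({ query }) => searchDatabase(query),
      // HTML the host renders when the view is shown
      ui: { html: './src/views/search.html' },
    }),
  },
});

// Works everywhere
// → Claude Desktop (MCP)
// → ChatGPT (Actions)
// → Any MCP-compatible client
```

Getting Started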

Ready to build for this new paradigm?